Elevate Your Rankings: Fix These SEO Mistakes

SEO stands out among various online marketing strategies as a highly effective approach to boost website traffic, enhance conversion rates, and drive sales.

SEO focuses on generating organic traffic, which fosters website growth and strengthens your brand. It is particularly valuable for websites that want to rank at the top of search results.

Google’s ranking systems have become far more sophisticated at delivering precise, high-quality, relevant information to users. Implementing the right SEO strategies therefore helps your website stay visible to both search engines and users.

However, mistakes in your SEO strategy can seriously hurt your traffic and your business. It is crucial to understand the common mistakes and make the necessary improvements to your SEO campaign to stay on the right track.

Fix These SEO Errors

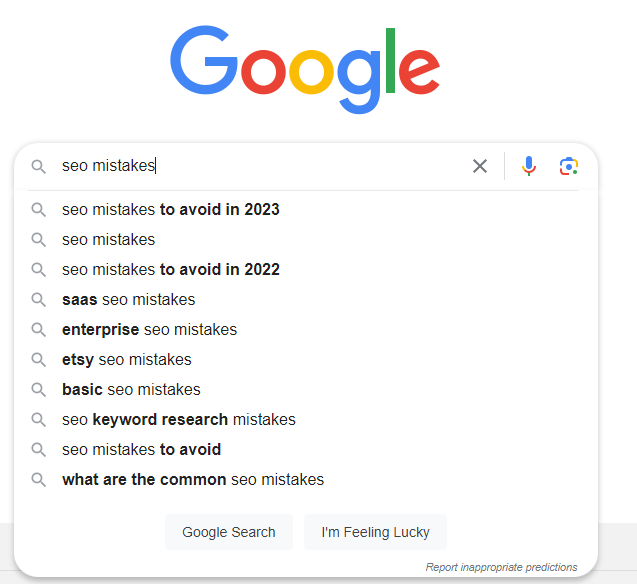

Ignoring Keyword Research

Keywords are the foundation of SEO. The right keywords drive traffic to your website. Skipping keyword research can lead to poorly targeted, low-quality content, which reduces your chances of ranking well in search results.

That’s why it’s important to identify relevant, high-traffic, low-competition keywords that can bring targeted visitors from organic search.
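Keyword tools report different metrics, but the selection rule above (relevant, high traffic, low competition) can be sketched as a simple filter. The Keyword fields, the shortlist function, and the default thresholds of 500 monthly searches and a difficulty of 40 are hypothetical; adjust them to whatever scale your keyword tool uses.

```python
from dataclasses import dataclass


@dataclass
class Keyword:
    phrase: str
    monthly_volume: int  # estimated searches per month, from your keyword tool
    difficulty: int      # competition score; assumed 0-100 scale (tool-specific)
    relevant: bool       # your own judgment: does it match what the site offers?


def shortlist(candidates, min_volume=500, max_difficulty=40):
    """Keep relevant, high-traffic, low-competition keywords, highest volume first.

    The default thresholds are illustrative, not industry standards.
    """
    picked = [
        k for k in candidates
        if k.relevant and k.monthly_volume >= min_volume and k.difficulty <= max_difficulty
    ]
    return sorted(picked, key=lambda k: k.monthly_volume, reverse=True)


# Made-up numbers, for illustration only.
candidates = [
    Keyword("seo mistakes", 2400, 35, True),
    Keyword("seo", 90000, 95, True),                    # too competitive
    Keyword("cheap flights", 50000, 20, False),         # not relevant to this site
    Keyword("robots.txt errors", 1300, 30, True),
    Keyword("meta description length", 200, 10, True),  # too little traffic
]
print([k.phrase for k in shortlist(candidates)])
# ['seo mistakes', 'robots.txt errors']
```

Relevance stays a human judgment here on purpose: no volume or difficulty score can tell you whether a keyword matches what your site actually offers.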

Creating Low-Quality Content

Content is still king in digital marketing. High-quality content sends a positive message to your audience and helps build trust in your brand.

Low-quality, thin, and duplicate content not only hampers your SEO efforts but also frustrates your visitors. Your website can also be penalized by search engines if it contains many duplicate or thin pages.

You can assess content quality with these five checks:

- Identifying duplicate content concerns.

- Evaluating content pages lacking value.

- Assessing readability challenges.

- Checking for keyword relevance.

- Addressing the problem of doorway pages.
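For the first check, duplicate content, one common technique is to compare pages by their overlapping word sequences (shingles) using Jaccard similarity. The sketch below assumes you already have each page’s body text; the function names and the 0.8 threshold are illustrative choices, not values any search engine publishes.

```python
import re
from itertools import combinations


def shingles(text, k=3):
    """Return the set of k-word sequences ("shingles") in the lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Shared shingles divided by all distinct shingles (1.0 means identical sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.8, k=3):
    """Return (url_a, url_b, score) for every page pair at or above the threshold.

    `pages` maps a URL to that page's extracted body text.
    """
    sets = {url: shingles(text, k) for url, text in pages.items()}
    pairs = []
    for (url_a, set_a), (url_b, set_b) in combinations(sets.items(), 2):
        score = jaccard(set_a, set_b)
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 2)))
    return pairs


pages = {
    "/red-shoes": "Red running shoes for trail and road runners, light and durable, on sale now.",
    "/red-shoes-sale": "Red running shoes for trail and road runners, light and durable, on sale today.",
    "/robots-guide": "How to find and fix common robots.txt errors on your site.",
}
print(near_duplicates(pages))
# [('/red-shoes', '/red-shoes-sale', 0.85)]
```

Pairs that score high are candidates for merging, rewriting, or pointing to one canonical version.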

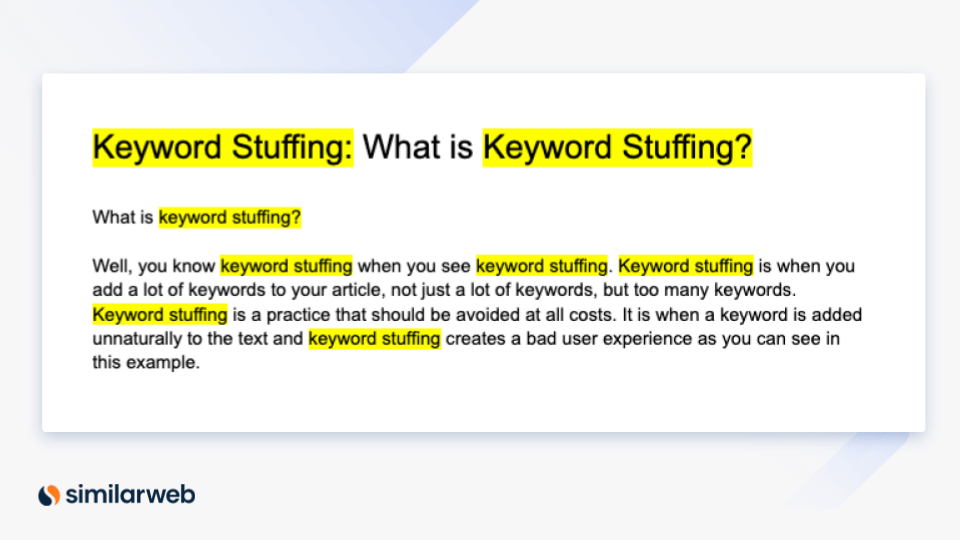

Practicing Keyword Stuffing

Keyword stuffing, repeating a target keyword unnaturally in the hope of ranking overnight, is one of several ill-advised shortcuts that try to speed up SEO results. Overusing keywords hurts both the readability and the overall quality of your content.

Google treats keyword stuffing as spam and lists it among the practices in its spam policies. Steer clear of it if you want your content marketing strategy to succeed.
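There is no official “safe” keyword density, and Google does not publish one. Still, measuring how much of a page a phrase takes up is a quick way to spot copy that needs a rewrite. This is a minimal sketch; the keyword_density helper is hypothetical and counts whole-word, case-insensitive, non-overlapping matches.

```python
import re


def keyword_density(text, keyword):
    """Percentage of the words in `text` taken up by occurrences of `keyword`.

    Matches are whole-word, case-insensitive, and non-overlapping.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n, hits, i = len(phrase), 0, 0
    while i <= len(words) - n:
        if words[i:i + n] == phrase:
            hits += 1
            i += n  # jump past the match so occurrences never overlap
        else:
            i += 1
    return 100.0 * hits * n / len(words)


stuffed = "Cheap shoes! Buy cheap shoes today, because cheap shoes are the best cheap shoes."
natural = "Our running shoes are built for trail and road, and they are on sale this week."
print(round(keyword_density(stuffed, "cheap shoes"), 1))  # 57.1
print(round(keyword_density(natural, "cheap shoes"), 1))  # 0.0
```

Treat a high percentage as a prompt to reread the page aloud, not as a ranking rule.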

Title and Meta Description Issues

Optimizing the title and meta description is crucial for search engine visibility. These tags appear directly in search results and greatly influence click-through rates (CTR). Users rely on them to decide whether to visit a page. Even with great content, if the title and meta description are irrelevant or poorly structured, efforts might go to waste.

Before publishing, check these three key elements:

- Relevant Title: The title should match the content and be around 60 characters long.

- Effective Meta Description: Write a meta description under 155 characters that includes your primary keyword.

- Keyword in URL: Including the primary keyword in the URL makes the page’s topic clear to both users and search engines.

Also give every page a unique title and meta description. Duplicated metadata makes it harder for search engines to tell pages apart and can hurt your rankings.
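A pre-publishing check for these points can be sketched as a small audit script. It assumes each page is a dictionary with url, title, description, and keyword fields (a hypothetical format) and that URL slugs use hyphens between words; the 60- and 155-character limits come from the guidelines above.

```python
from collections import Counter
from urllib.parse import urlparse

TITLE_MAX = 60   # approximate title length suggested above
META_MAX = 155   # approximate meta description length suggested above


def audit_metadata(pages):
    """Return human-readable issues for a list of page dicts (hypothetical format)."""
    issues = []
    titles = Counter(p["title"].strip().lower() for p in pages)
    descs = Counter(p["description"].strip().lower() for p in pages)
    for p in pages:
        url, kw = p["url"], p["keyword"].lower()
        if len(p["title"]) > TITLE_MAX:
            issues.append(f"{url}: title is {len(p['title'])} chars (> {TITLE_MAX})")
        if len(p["description"]) > META_MAX:
            issues.append(f"{url}: meta description is {len(p['description'])} chars (> {META_MAX})")
        if kw not in p["description"].lower():
            issues.append(f"{url}: meta description lacks '{kw}'")
        # Assumes multi-word keywords appear in slugs as hyphenated words.
        if kw.replace(" ", "-") not in urlparse(url).path.lower():
            issues.append(f"{url}: URL slug lacks '{kw}'")
        if titles[p["title"].strip().lower()] > 1:
            issues.append(f"{url}: duplicate title")
        if descs[p["description"].strip().lower()] > 1:
            issues.append(f"{url}: duplicate meta description")
    return issues


pages = [
    {"url": "https://www.example.com/seo-mistakes", "title": "Common SEO Mistakes to Fix",
     "description": "Learn which SEO mistakes hurt rankings and how to fix them.",
     "keyword": "SEO mistakes"},
    {"url": "https://www.example.com/page?id=7", "title": "Common SEO Mistakes to Fix",
     "description": "A very long description " * 8, "keyword": "robots.txt"},
]
for issue in audit_metadata(pages):
    print(issue)
```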

Anchor Text Over-Optimization

Focusing too heavily on keyword-rich anchor text doesn’t lead to better rankings; it can actually hurt your keyword’s performance in search results. Google’s Penguin update, first released in 2012, made this evident: numerous websites lost rankings and traffic because of excessive keyword-matched anchor text.

To avoid this trap, diversify your anchor text and keep a healthy balance. A varied mix keeps your anchor text from looking spammy and over-optimized.

Here are the main types of anchor text you can mix:

- LSI and Partial Match Anchors: Incorporate variations and related terms of your target keywords to create a more natural and contextual anchor text.

- Brand Anchor Text: Use your brand name as the anchor text. This helps build brand recognition and authority.

- Generic Anchors: Utilize generic phrases as anchors, such as “click here,” “learn more,” or “check this out.” These provide a balanced and user-friendly anchor profile.

- Naked URLs: Simply use the URL itself as the anchor text. This straightforward approach adds authenticity to your link profile.

- Exact Match Anchors: Exact match anchor text can be effective in small doses, but overusing it can trigger algorithmic penalties.

By thoughtfully combining these anchor text types, you can strengthen your SEO efforts while reducing the risk of penalties. Remember, the key is diversity and moderation.
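To see whether your backlink profile leans too heavily on one type, you can bucket anchors into the categories above and compute each share. This is a rough heuristic sketch: the category rules, the GENERIC phrase list, and the function names are hypothetical. It only detects partial matches that share a word with the keyword, so related-term (LSI) anchors without a shared word land in “other.” No search engine publishes a safe ratio.

```python
from collections import Counter

# Hypothetical list of generic phrases; extend it with your own.
GENERIC = {"click here", "learn more", "check this out", "read more", "here"}


def classify_anchor(anchor, keyword, brand, domain):
    """Put one anchor text into a rough category using simple string rules."""
    a = anchor.strip().lower()
    kw, br = keyword.lower(), brand.lower()
    if a.startswith(("http://", "https://", "www.")) or domain.lower() in a:
        return "naked_url"
    if a == kw:
        return "exact"
    if br in a:
        return "brand"
    if a in GENERIC:
        return "generic"
    if set(kw.split()) & set(a.split()):
        return "partial"  # shares at least one word with the target keyword
    return "other"


def anchor_profile(anchors, keyword, brand, domain):
    """Return each category's share of all anchors, as a percentage."""
    counts = Counter(classify_anchor(a, keyword, brand, domain) for a in anchors)
    total = sum(counts.values())
    return {cat: round(100 * n / total, 1) for cat, n in counts.items()}


anchors = [
    "seo mistakes", "seo mistakes", "Example Blog", "click here",
    "https://www.example.com/seo-mistakes", "common seo errors", "great article",
]
print(anchor_profile(anchors, "SEO mistakes", "Example", "example.com"))
```

If “exact” dominates the output, that is the imbalance the Penguin update targeted.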

Errors in Robots.txt

Robots.txt is a powerful tool in your SEO toolbox that lets you manage how search engine crawlers access your site. It tells crawlers and bots which pages or sections they may crawl and which to leave alone. Note that it controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it.

Write this file with care. Even a minor error can block crawlers from important pages and keep those pages out of search results.

To protect your site’s visibility, always validate your robots.txt file. Google Search Console’s robots.txt report (which replaced the older Robots.txt Tester) shows the robots.txt files Google found for your site and flags any errors or warnings it encountered while parsing them.
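Before uploading changes, you can also test a draft locally. Python’s standard-library urllib.robotparser parses rules and answers whether a given URL may be fetched; the blocked_urls helper and the sample rules are hypothetical. One caveat: this parser applies the first matching rule in file order and does not interpret the * and $ wildcards, while Google uses the most specific matching rule, so results can differ for files that mix Allow and Disallow lines or use wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft rules for www.example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs in `urls` that these robots.txt rules would block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Pages you expect crawlers to reach, plus one you meant to block.
urls_to_check = [
    "https://www.example.com/",
    "https://www.example.com/blog/seo-mistakes",
    "https://www.example.com/admin/login",
]
print(blocked_urls(ROBOTS_TXT, urls_to_check))
# ['https://www.example.com/admin/login']
```

If your homepage ever shows up in this list, the draft is blocking more than you intended.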

You can also view your live robots.txt file directly at the root of your domain:

https://www.example.com/robots.txt

“You only need a robots.txt file if your site includes content that you don’t want Google or other search engines to index.”

Google Webmaster – Search Engine Guidelines

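Here are five common mistakes to avoid:

- Placing the File Incorrectly: Crawlers only look for robots.txt in your website’s root directory (for example, https://www.example.com/robots.txt). A file anywhere else is ignored.

- Blocking the Homepage: Be careful not to block your homepage by accident. A single “Disallow: /” rule blocks crawling of the entire site.

- Ignoring Case Sensitivity: The file must be named robots.txt in lowercase, and the paths in your rules are case-sensitive, so “Disallow: /Admin/” does not block /admin/.

- Multiple Rules on One Line: Put each directive on its own line; crawlers may misread rules stacked on a single line.

- Unusual Characters in Rules: Stick to standard characters (no curly quotes pasted from a word processor) and save the file as plain UTF-8 text so rules are interpreted correctly.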
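The five mistakes above can also be caught with a rough lint pass before you upload the file. This sketch is a hypothetical helper, not a Google tool: it checks that the file lives at /robots.txt, then flags lines with more than one directive, non-ASCII characters, a blanket “Disallow: /”, and rule paths containing capital letters (worth double-checking against your real URLs, since matching is case-sensitive).

```python
import re
from urllib.parse import urlparse

# Field names this sketch recognizes; real files may use others.
DIRECTIVE = re.compile(r"(user-agent|disallow|allow|sitemap|crawl-delay)\s*:", re.IGNORECASE)


def lint_robots_txt(robots_url, robots_txt):
    """Return warnings for the five mistakes listed above (rough heuristics only)."""
    warnings = []
    if urlparse(robots_url).path != "/robots.txt":
        warnings.append("file is not at the site root as /robots.txt")
    for n, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding space
        if not line:
            continue
        if len(DIRECTIVE.findall(line)) > 1:
            warnings.append(f"line {n}: more than one rule on a single line")
        if not line.isascii():
            warnings.append(f"line {n}: unusual (non-ASCII) characters")
        if re.fullmatch(r"disallow\s*:\s*/", line, re.IGNORECASE):
            warnings.append(f"line {n}: 'Disallow: /' blocks the whole site, homepage included")
        rule = re.match(r"(?:dis)?allow\s*:\s*(\S+)", line, re.IGNORECASE)
        if rule and rule.group(1) != rule.group(1).lower():
            warnings.append(f"line {n}: path {rule.group(1)} has capitals; matching is case-sensitive")
    return warnings


draft = "User-agent: * Disallow: /tmp/\nDisallow: /\nDisallow: /Private/\n"
for warning in lint_robots_txt("https://www.example.com/blog/robots.txt", draft):
    print(warning)
```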

Robots.txt gives you control over what search engines crawl. If there are pages or backend sections you want to keep crawlers out of, this is your tool. A precise robots.txt file tailored to your website helps crawlers spend their time on the pages that matter.

Wrapping Up

Mapping out an effective SEO strategy is a crucial step in achieving your goals with the least hassle. Understanding typical SEO errors can help you stay on the right track.